Administrative metadata provides information to help manage a digital object according to locally defined needs, to secure its integrity, and to enable it to be accessed and used by the designated community.

The concept of 'administrative metadata' is relatively new to the information community, outside of a few specialized fields. It came into its own starting in 2004, when several major guidelines were released that attempted to sketch out the digital library terrain. What emerged was a general consensus concerning the scope and function of administrative metadata: to support the creation, management, and use of digital resources. At the same time, though the term 'administrative' (or other words used to define administrative metadata, such as 'management' or 'curation') suggests a set of concrete activities that this metadata will support, none of the guidelines makes explicit either these activities or the concrete elements which might support them. NARA aptly sums up that "these [non-descriptive] types of metadata tend to be less standardized and more aligned with local requirements." (NARA, ''Technical Guidelines for Digitizing Archival Materials for Electronic Access'', p. 6.)

The concept has been reinforced by the rise in the use of the Metadata Encoding and Transmission Standard (METS). The METS document structure has a section dedicated to Administrative Metadata. This section "contains the administrative metadata pertaining to the digital object, its components and any original source material from which the digital object is derived" and has four discrete sub-sections:

- ''Technical Metadata <techMD>:'' information regarding creation, format, and use characteristics of the files which comprise a digital object.

- ''Intellectual Property Rights Metadata <rightsMD>:'' information about copyright and licensing pertaining to a component of the METS object.

- ''Source Metadata <sourceMD>:'' information on the source format or media of a component of the METS object such as a digital content file. It is often used for discovery, data administration, or preservation of the digital object.

- ''Digital Provenance Metadata <digiprovMD>:'' information on any preservation-related actions taken on the various files which comprise a digital object (e.g., those subsequent to the initial digitization of the files such as transformation or migrations, or, in the case of born digital materials, the files' creation). This information can then be used to judge how those processes might have altered or corrupted the object’s ability to accurately represent the original item.

All can be expressed according to any number of known metadata standards or locally produced XML schemas. However, even the METS editorial board notes, "Administrative metadata is, in many ways, a much less clear cut category of metadata than what is traditionally considered descriptive metadata. While METS does distinguish different types of administrative metadata, it is also possible to include all metadata not considered descriptive into the <amdSec> without distinguishing the types of administrative metadata further." One thing that is clear from the METS subcategories, however, is that not all non-descriptive metadata is administrative. Excluded, specifically, is the metadata that supports general system administration and database management functions, including: authentication and use logs; metadata on the creation, modification, and deletion of metadata records; metadata on the batch interchange of records; and access and delivery logs.
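As a minimal sketch of the structure described above, the following Python builds an empty METS administrative metadata section with one wrapper per sub-section. The identifier scheme (`amd-obj001` and so on) and the use of `MDTYPE="OTHER"` are assumptions made for illustration, and the output has not been validated against the METS schema.

```python
import xml.etree.ElementTree as ET

METS_NS = "http://www.loc.gov/METS/"
ET.register_namespace("mets", METS_NS)

# The four sub-sections of <amdSec>, in the order METS lists them.
SUBSECTIONS = ("techMD", "rightsMD", "sourceMD", "digiprovMD")


def build_amd_sec(object_id: str) -> ET.Element:
    """Return an <amdSec> holding one empty wrapper per METS sub-section."""
    amd_sec = ET.Element(f"{{{METS_NS}}}amdSec", {"ID": f"amd-{object_id}"})
    for tag in SUBSECTIONS:
        section = ET.SubElement(
            amd_sec, f"{{{METS_NS}}}{tag}", {"ID": f"{tag}-{object_id}"}
        )
        # mdWrap declares which metadata standard the wrapped XML follows;
        # "OTHER" is a placeholder here (hypothetical choice).
        md_wrap = ET.SubElement(section, f"{{{METS_NS}}}mdWrap", {"MDTYPE": "OTHER"})
        # xmlData is where the standard-specific metadata would be placed.
        ET.SubElement(md_wrap, f"{{{METS_NS}}}xmlData")
    return amd_sec


amd = build_amd_sec("obj001")
print(ET.tostring(amd, encoding="unicode"))
```

Each wrapper stays empty here; in practice a repository would place metadata from its chosen standard or local schema inside the `xmlData` element.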

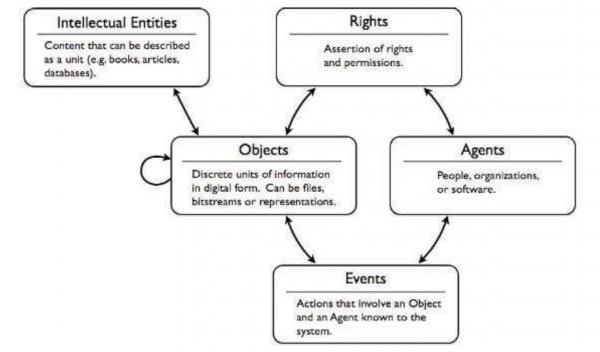

In the last few years, the PREMIS (PREservation Metadata: Implementation Strategies) Data Dictionary for Preservation Metadata has emerged as the sole standard and schema to address, more or less, all the subcategories of Administrative Metadata listed in METS. The PREMIS model is based on four conceptual entities: Objects, Events, Agents, and Rights. In most cases, PREMIS entities align cleanly with the METS subcategories:

- PREMIS Object Entity corresponds to METS Technical Metadata;

- PREMIS Rights Entity corresponds to METS Intellectual Property Rights;

- PREMIS Event Entity corresponds to METS Digital Provenance;

- PREMIS Agent Entity has less clear correlation, though it has been suggested that it may also be included within METS Digital Provenance.
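The correspondence listed above can be written down as a simple lookup table. This is only a sketch: the dictionary keys and the function name are invented for illustration, and the placement of Agent under Digital Provenance follows the suggestion mentioned in the list rather than a settled rule.

```python
from typing import Optional

# PREMIS entity -> METS administrative sub-section, as listed in the text.
PREMIS_TO_METS = {
    "object": "techMD",
    "rights": "rightsMD",
    "event": "digiprovMD",
    "agent": "digiprovMD",  # suggested placement only; the correlation is less clear
}


def mets_section_for(premis_entity: str) -> Optional[str]:
    """Return the METS sub-section for a PREMIS entity, or None if unmapped."""
    return PREMIS_TO_METS.get(premis_entity.lower())


print(mets_section_for("Event"))
print(mets_section_for("Agent"))
```

Note that no PREMIS entity maps to `sourceMD` in this table.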

METS Source remains problematic. The METS manual lists only PREMIS as "a current source description standard". Given the stated role of the Source subcategory, however, it is not clear how the METS editorial board would apply PREMIS, and we have not been able to locate model implementations. In most cases, it is in fact the domain-specific descriptive standard which captures the media and other physical description information on the source material, though for born-digital or analog audiovisual formats, it is possible that PREMIS could offer possibilities for more granular and standardized physical description.

What is perhaps more interesting is that PREMIS markets itself as a preservation standard. This raises the question: What is the difference between administrative and preservation metadata? Preservation metadata, explain Lavoie and Gartner, has become necessary due to the nature of digital objects themselves. Unlike their analog counterparts (though magnetic tapes may be an exception to this), digital objects are technology dependent, easily mutable, and deeply bound by digital property rights. All three qualities are amplified due to the brief 'shelf life' of storage media and rapid obsolescence of technology. For this reason, preservation metadata must include the following information:

- ''Provenance:'' Who has had custody/ownership of the digital object?

- ''Authenticity:'' Is the digital object what it purports to be?

- ''Preservation activity:'' What has been done to preserve the digital object?

- ''Technical environment:'' What is needed to render and use the digital object?

- ''Rights management:'' What intellectual property rights must be observed?

(Lavoie and Gartner. ''Technology Watch Report: Preservation Metadata''. 2005.)

In essence, the purpose of preservation metadata is twofold: 1) to establish and secure the fixity, integrity, and authenticity of the digital object; and 2) to enable present and future users to access, render, and use the digital object and its intellectual content. In the terms of the Open Archival Information System (OAIS) reference model, these are the same sets of information that make up the so-called representation information and preservation descriptive information at the core of the information packages submitted to, archived in, and disseminated through digital repositories.

In fact, administrative metadata as defined by METS confronts the same problems, has the same scope, and supports the same activities with the same overall purpose as preservation metadata. Both support the ingest, transformation, storage, and securing of information packages. The difference may be more a matter of emphasis, degree, and context than anything else. Administrative metadata is generally collected according to local requirements and depending on the aims of a repository at a given moment. Preservation metadata is generally collected as one of several pillars that support a more comprehensive preservation strategy, which likely entails explicit and documented policies related to: storage management; backup; transformation/migration; disaster planning; rights management; and business succession or contingency planning.

With reference to the HOPE project, this means that for the moment it is necessary to collect only the administrative metadata which supports the HOPE service’s specific functions:

- To submit, store, and make available over the medium term digital masters and/or digital derivatives;

- To ensure the fixity and integrity of objects after submission to our system;

- To deliver objects in a form that can be rendered in the online environment and understood by the designated community;

- To clarify, record, and implement the access and use rights and restrictions over content;

- And to this may be added, to store information in a manner that will not preclude later preservation activities.

As the scope of the project develops, administrative metadata can eventually be extended and filled out to support a full range of preservation functions and services.

PREMIS

PREMIS (PREservation Metadata: Implementation Strategies) is an international working group established in 2003 to develop metadata for use in digital preservation. The group was "charged to define a set of semantic units that are implementation independent, practically oriented, and likely to be needed by most preservation repositories." In May 2005, PREMIS released the Data Dictionary for Preservation Metadata; Version 2.2 was released in July 2012.

The PREMIS Data Dictionary defines a core set of semantic units that repositories should know in order to perform their preservation functions. Though preservation functions can vary from one repository to another, they will generally include actions to ensure that digital objects remain viable (i.e., can be read from media) and renderable (i.e., can be displayed, played or otherwise interpreted by application software), as well as to ensure that digital objects in the repository are not inadvertently altered, and that legitimate changes to objects are documented.

It is important to note that the PREMIS Data Dictionary is not intended to lay out all possible preservation metadata elements, only those that most repositories will need to know most of the time. It is also important to note that most elements will likely already be present somewhere in a given digital repository and are collected through other repository activities or automatically supplied during the life cycle of the resource; in this sense the PREMIS Data Dictionary serves as a cross-section of existing metadata with a preservation focus. Finally, the PREMIS Data Dictionary is also implementation independent; it defines semantic units (rather than metadata elements), which may be mapped to any existing schema—though PREMIS XML offers an easy implementation. There is an expectation that when PREMIS is used for exchange it will be represented in XML.

PREMIS conformance is relatively liberal, and more important, perhaps, than the few requirements for conformance are those things not required. For instance, a repository is not required to support all of the entity types defined in the PREMIS data model. It is also not required to store metadata internally using the names of PREMIS semantic units, or using values that follow PREMIS data constraints. In other words, it does not matter how a repository 'knows' a PREMIS value: by storing it with the same name or a different name, by mapping from another value, by pointing to a registry, by inference, by default, or by any other means. So long as the repository can provide a good PREMIS value when required, it conforms.
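To illustrate how a repository might 'know' a PREMIS value without storing it under the PREMIS name, here is a minimal sketch. The local field names (`file_bytes`, `mime`, `md5sum`), the sample values, and the rule table are all hypothetical; only the semantic unit names on the left come from PREMIS.

```python
from typing import Any, Callable, Dict

# Hypothetical local record; field names and values are placeholders.
local_record = {
    "file_bytes": 204800,
    "mime": "image/tiff",
    "md5sum": "9e107d9d372bb6826bd81d3542a419d6",
}

# Each PREMIS semantic unit is paired with a rule for deriving it locally.
PREMIS_RULES: Dict[str, Callable[[Dict[str, Any]], Any]] = {
    "size": lambda rec: rec["file_bytes"],          # stored under a different name
    "formatName": lambda rec: rec["mime"],          # mapped from another value
    "messageDigest": lambda rec: rec["md5sum"],     # stored under a different name
    "messageDigestAlgorithm": lambda rec: "MD5",    # supplied by default
    "compositionLevel": lambda rec: rec.get("composition_level", 0),  # default if absent
}


def premis_value(record: Dict[str, Any], unit: str) -> Any:
    """Provide the value of a PREMIS semantic unit on request."""
    return PREMIS_RULES[unit](record)


for unit in PREMIS_RULES:
    print(unit, "=", premis_value(local_record, unit))
```

The repository's internal storage is untouched; the PREMIS view is produced only when a value is requested, for example at export.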

Table 4-A - PREMIS Data Model Entities: Objects, Events, Agents, Rights, and Intellectual Entities. Objects and Agents exist as the two primary nodes, connected to each other through Events and Rights.

(See Administrative and Technical Metadata Standards, Section on PREMIS for more detailed information.)

In 2002-2003, the National Library of New Zealand developed its own preservation metadata schema as a working tool for the collection of preservation metadata applicable to its digital collections. Created in the wake of the release of the OAIS reference model and during the flurry of work which would eventually produce PREMIS, the schema was designed to parallel the NISO Z39.87 technical metadata standard for raster images. The library soon after released the National Library of New Zealand Preservation Metadata Extract Tool, which complements this framework. Though the NLNZ standard has been all but supplanted by PREMIS, the extraction tool is still widely used. Today the NLNZ extraction tool can generate technical metadata for PREMIS objects encoded using the PREMIS XML schema.

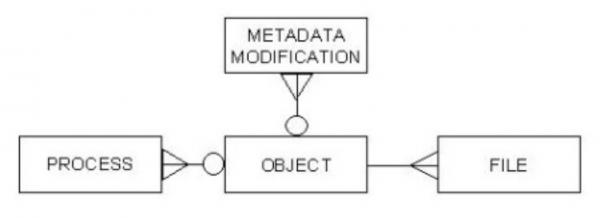

Diagram 4-B - NLNZ Data Model Entities: Object, Process, File, Metadata Modification.

(See Administrative and Technical Metadata Standards, Section on NLNZ for more detailed information.)

One of the primary differences between PREMIS and NLNZ is that the NLNZ schema is predicated on the idea of a Preservation Master. In practice this means that various other manifestations, e.g. dissemination formats, are not considered preservation objects and will not have preservation metadata retained about them. While in PREMIS each transformation produces a wholly new object with a wholly new set of object metadata, in NLNZ, preservation masters themselves are dynamic and will be subject to further preservation processes, e.g. migration from an obsolete to a current format. This creates a life cycle of creation, use, and eventual replacement, and object metadata is continuously modified to reflect this; in fact, Metadata Modifications are themselves considered an entity in the model. In NLNZ, at any given time there can be only one preservation master for an object, and any object carrying the status of preservation master will be subject to the maximum preservation effort whilst it has that status.

A second difference is that the NLNZ model highlights the specific structural relationship between files and objects. Preservation metadata is considered to belong to the master object in a 1:1 relationship and only indirectly to the files. Processes and Metadata Modifications are associated with objects only. The following is a list of types of digital objects defined:

- ''Simple objects:'' One file intended to be viewed as a single object (e.g., a word-processed document comprising one essay).

- ''Complex objects:'' A group of dependent files intended to be viewed as a single object (e.g., a website or an object created as more than one file, such as a database), which may not function without all files being present in the right place.

- ''Object groups:'' A group of files not dependent on each other in the manner of a complex object (e.g., a group of 100 letters originally acquired on a floppy disk). This object may be broken up into (described as) 100 single objects or 4 discrete objects containing 25 letters each, or it may be kept together as a single logical object ('Joe Blogg's Letters').

In some sense, PREMIS is granularity agnostic. Many elements can apply equally to all objects: representation objects, file objects, or bitstream objects. PREMIS offers the Object Relationship semantic unit to structure these. Finally, NLNZ does not emphasize agents or rights. Rights, as such, are bypassed altogether, though the Process entity does have a 'permissions' element. Agents are folded into their respective entities but feature particularly prominently in the Process entity.

HOPE is recommending the use of PREMIS metadata elements within a METS framework. The PREMIS element set can be extended within the appropriate METS Administrative Metadata sections as needed using PREMIS extension containers. The NLNZ standard can help guide in the selection of media specific metadata from technical standards. It may also help make distinctions between masters and derivatives and between files, objects, and collections when selecting, creating, and storing preservation metadata.

Technical Metadata

Although technical metadata is only a subset of the complete suite of administrative metadata necessary to manage, secure, and provide access to digital objects, it has often been called the first line of defense. Technical metadata assures that the information content of a digital file can be resurrected even if traditional viewing applications associated with the file have vanished. Furthermore, it provides metrics that allow machines, as well as humans, to evaluate the accuracy of output from a digital file. In its entirety, technical metadata supports the management and preservation of digital content through the different stages of its life cycle.

Currently, there exists no 'out of the box' technical metadata standard suitable for all kinds of digital materials. Most available technical metadata standards were created and finalized years ago or have remained in an early ''beta'' stage, perhaps indefinitely. Only a subset of the PREMIS semantic units, those describing the Digital Object entity, can be considered technical. That being said, PREMIS can provide a core set of technical metadata to be extended by more particular media-specific standards.

Media-specific technical standards tend to be exhaustive—attempting to identify all possible elements that might characterize the digital object—as they are created for a range of environments and purposes. For this reason, the technical standards should not be used as strict guidelines but should be regarded as a set of options from which to choose. When selecting media-specific elements, it is important to consider:

- the nature of the digital collections;

- the needs and requirements of the repository's target users;

- the functions that the repository will be asked to fulfill;

- the feasibility and method of collecting and storing the metadata.

The following is a list of technical elements defined by PREMIS to describe the Digital Object entity. PREMIS limits the scope of its work to elements that would apply across all formats. These can be seen as essential elements, the collection of which should be prioritized in local workflows.

- ''objectCharacteristics:'' Technical properties of a file or bitstream that are applicable to all or most formats.

Elements: compositionLevel, fixity, size, format, creatingApplication, inhibitors, objectCharacteristicsExtension.

- ''environment:'' Hardware/software combinations supporting use of the object.

Elements: environmentCharacteristic, environmentPurpose, environmentNote, dependency, software, hardware, environmentExtension.

- ''signatureInformation:'' A container for PREMIS defined and externally defined digital signature information, used to authenticate the signer of an object and/or the information contained in the object.

Elements: signature, signatureInformationExtension.
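The objectCharacteristics elements listed above could be serialized roughly as follows. This is a sketch under stated assumptions: it uses the PREMIS version 2 namespace, fills in only a few of the elements, takes the sample bytes and format name as invented inputs, and has not been validated against the PREMIS XML schema.

```python
import hashlib
import xml.etree.ElementTree as ET

# Assumed namespace for PREMIS version 2.x XML.
PREMIS_NS = "info:lc/xmlns/premis-v2"
ET.register_namespace("premis", PREMIS_NS)


def q(name: str) -> str:
    """Qualify an element name with the PREMIS namespace."""
    return f"{{{PREMIS_NS}}}{name}"


def object_characteristics(data: bytes, format_name: str) -> ET.Element:
    """Build an objectCharacteristics container for the given file content."""
    oc = ET.Element(q("objectCharacteristics"))
    ET.SubElement(oc, q("compositionLevel")).text = "0"  # 0 = not compressed or encrypted

    fixity = ET.SubElement(oc, q("fixity"))
    ET.SubElement(fixity, q("messageDigestAlgorithm")).text = "MD5"
    ET.SubElement(fixity, q("messageDigest")).text = hashlib.md5(data).hexdigest()

    ET.SubElement(oc, q("size")).text = str(len(data))  # size in bytes

    fmt = ET.SubElement(oc, q("format"))
    designation = ET.SubElement(fmt, q("formatDesignation"))
    ET.SubElement(designation, q("formatName")).text = format_name
    return oc


oc = object_characteristics(b"sample file content", "text/plain")
print(ET.tostring(oc, encoding="unicode"))
```

Media-specific metadata would go in an objectCharacteristicsExtension child, which this sketch leaves out.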

PREMIS can be extended according to specific PREMIS guidelines using 'Extension' containers: objectCharacteristicsExtension, environmentExtension, signatureInformationExtension. The following are the commonly accepted standards for still images, audio, and video file formats:

- NISO Standard Z39.87: Technical Metadata for Digital Still Images

- AudioMD: Audio Technical Metadata Extension Schema

- VideoMD: Video Technical Metadata Extension Schema

(See Administrative and Technical Metadata Standards, Section on Media Specific Standards for Technical Metadata for more detailed information.)

Technical Metadata Collection and Storage Workflows

Unlike descriptive metadata, technical metadata must be collected from different sources over the entire course of an object's life cycle. Thus, robust workflows for the collection and short- and long-term storage of technical metadata are essential. When setting up technical metadata workflows, it is important to consider the following:

''Source:'' how is the metadata created and at what point can the metadata be captured?

Examples:

It is intellectual information that can only be gathered manually at the point of creation, e.g. hardware or software information.

The file itself carries the information, but it is not possible to extract it, e.g. low-level codec information.

The file itself carries this information and could be extracted with a file validation tool, or could be generated automatically, e.g. mime-type, dimension, color-depth.

''Storage:'' how should the data be stored over the short and long term?

Examples:

The data is stored in a database with other object metadata.

The data is recorded by digitization vendor in an Excel sheet or machine readable form and can be imported into a management system when needed.

The data is embedded in the file and can be extracted when needed.

The data is known by staff and can be elicited when needed.

''Basis:'' the technical metadata is applicable to the object in which version(s) or form(s)?

Examples: master, derivative, born-digital master, raster image formats, etc.

''Granularity:'' at which level should the metadata be captured?

Examples: bitstream, file, object, object group, collection.
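For the case above in which information can be generated automatically from the file, a very light collection step might look like the following sketch. The function name and the returned keys are hypothetical. `mimetypes.guess_type` guesses from the file name extension only, so it stands in here for a real file validation tool, which would inspect the content itself.

```python
import mimetypes
import os
import tempfile
from typing import Dict, Optional, Union


def extract_basic_technical_metadata(path: str) -> Dict[str, Union[str, int, Optional[str]]]:
    """Collect file-level values that can be gathered without manual input."""
    mime_type, _encoding = mimetypes.guess_type(path)  # extension-based guess
    return {
        "fileName": os.path.basename(path),
        "size": os.path.getsize(path),  # size in bytes
        "mimeType": mime_type,          # None when the extension is unknown
    }


# Demonstration on a throwaway file.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "letter.txt")
    with open(sample, "wb") as handle:
        handle.write(b"Dear reader")
    metadata = extract_basic_technical_metadata(sample)

print(metadata)
```

Values such as image dimensions or color depth would need a format-aware tool and are not covered here.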

HOPE recommends that repositories define a core set of PREMIS technical elements with media-specific extensions that can be more or less exhaustive depending on local needs and resources and the nature of the digital content. For small repositories or those not undertaking full preservation activities, a relatively light workflow is recommended to support the collection and storage of technical metadata. Institutions should focus particularly on gathering the metadata which cannot be gathered at a later point and storing it in a machine-readable form. Only those elements needed for the daily functioning of local systems must be stored and exportable in XML form. HOPE itself currently requires little technical metadata.

Fixity

Fixity, in preservation terms, means that the digital object has not been changed between two points in time or two events. Technologies such as checksums, message digests, and digital signatures are used to verify a digital object's fixity. Fixity information, the information created by these fixity checks, provides evidence on the bit integrity of the digital objects and is thus an essential element of a trusted repository. A fixity check may be used to verify that any action taken upon the digital resource does not alter the resource. Fixity checks all work in the same basic way: a value is initially generated and saved; it is then recomputed and compared to the original to ensure that the object (file or bitstream) has not changed.
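The generate, save, recompute, and compare cycle described above can be sketched in a few lines of Python using the standard `hashlib` module. The function names are illustrative, and SHA-256 is chosen here only as a default for the sketch; any algorithm name supported by `hashlib` could be passed instead.

```python
import hashlib


def compute_fixity(data: bytes, algorithm: str = "sha256") -> str:
    """Generate a fixity value (hex digest) for the given content."""
    return hashlib.new(algorithm, data).hexdigest()


def fixity_unchanged(data: bytes, stored_value: str, algorithm: str = "sha256") -> bool:
    """Recompute the fixity value and compare it to the stored one."""
    return compute_fixity(data, algorithm) == stored_value


# Step 1: generate and save the value when the object is first received.
original = b"digital master"
stored = compute_fixity(original)

# Step 2: later, recompute and compare.
print(fixity_unchanged(original, stored))           # content untouched
print(fixity_unchanged(b"digital mastex", stored))  # one byte altered
```

In a repository the content would be read from storage rather than held in memory, but the comparison is the same.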

A checksum is the simplest yet least secure method of verifying fixity. Checksums are typically used in error detection to find accidental problems in transmission and storage. The least complicated checksum algorithms do not account for such changes as the reordering of bytes or changes that cancel one another out. The more secure checksums, such as the cyclic redundancy check (CRC), are hash functions that control for such changes. Because of the comparative simplicity of their mathematical algorithms, however, checksums are vulnerable to deliberate and malicious tampering.
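The weakness of the simplest checksums can be shown with a small sketch. The additive checksum below (sum of byte values modulo 256) is a deliberately naive example written for illustration; `zlib.crc32` is the CRC-32 implementation from the Python standard library.

```python
import zlib


def additive_checksum(data: bytes) -> int:
    """Naive checksum: sum of all byte values, modulo 256."""
    return sum(data) % 256


# Case 1: the same bytes in a different order.
first, reordered = b"ab", b"ba"
print(additive_checksum(first) == additive_checksum(reordered))  # reordering goes unnoticed
print(zlib.crc32(first) == zlib.crc32(reordered))                # CRC-32 tells them apart

# Case 2: two changes that cancel each other out (+1 and -1).
before, after = bytes([10, 20]), bytes([11, 19])
print(additive_checksum(before) == additive_checksum(after))     # changes cancel out
print(zlib.crc32(before) == zlib.crc32(after))                   # CRC-32 tells them apart
```

Neither method is designed to withstand deliberate tampering, since an attacker can adjust the data until the value matches.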

Cyclic redundancy check (CRC): CRC is a technique for detecting errors in digital data, but not for making corrections when errors are detected. It is used primarily in data transmission. In the CRC method, a certain number of check bits, often called a checksum, are appended to the message being transmitted. The receiver can determine whether or not the check bits agree with the data, to ascertain with a certain degree of probability whether or not an error occurred in transmission. If an error occurred, the receiver sends a 'negative acknowledgement' (NAK) back to the sender, requesting that the message be retransmitted.
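The exchange described above, in which check bits are appended by the sender and verified by the receiver, might be sketched as follows. The framing (a 4-byte CRC-32 appended to the message) and the function names are assumptions made for illustration, not a description of any particular transmission protocol.

```python
import zlib


def frame(message: bytes) -> bytes:
    """Sender side: append the 4-byte CRC-32 of the message as check bits."""
    return message + zlib.crc32(message).to_bytes(4, "big")


def receive(framed: bytes) -> str:
    """Receiver side: recompute the CRC and acknowledge or request a resend."""
    message, check_bits = framed[:-4], framed[-4:]
    if zlib.crc32(message).to_bytes(4, "big") == check_bits:
        return "ACK"
    return "NAK"  # negative acknowledgement: ask the sender to retransmit


sent = frame(b"hello")
# Simulate a single flipped bit in the first byte during transmission.
corrupted = bytes([sent[0] ^ 0x01]) + sent[1:]

print(receive(sent))
print(receive(corrupted))
```

The receiver learns only that an error occurred, not where, which is why the remedy is retransmission rather than correction.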

Unlike simple checksums, cryptographic hash functions such as message digests are designed to resist deliberate tampering. A message digest is computed by applying an algorithm to a file of any length to produce a short, fixed-length character string that is, for practical purposes, unique to that file. What makes message digests more secure than checksums is the complexity of the algorithm. A message digest is like the fingerprint of a digital object. Hashes are one-way operations; a hash can be created from a digital object, but the digital object cannot be recreated from the hash. MD5 and the Secure Hash Algorithm SHA-1 are commonly used cryptographic hash algorithms, although practical collision attacks have since been demonstrated against both, so they are better suited to detecting accidental corruption than to defending against deliberate tampering. The HOPE Shared Object Repository (SOR) currently uses the MD5 algorithm for ingest and routine fixity checks.

Message Digest Algorithm 5 (MD5): The MD5 algorithm takes as input a message of arbitrary length and produces as output a 128-bit 'fingerprint' or 'message digest' of the input. When the algorithm was published, it was conjectured that it is computationally infeasible to produce two messages having the same message digest, or to produce any message having a given pre-specified target message digest. The MD5 algorithm was intended for digital signature applications, where a large file must be 'compressed' in a secure manner before being encrypted with a private (secret) key under a public-key cryptosystem such as RSA. In essence, MD5 is a way to verify data integrity, and it is much more reliable than simple checksums and many other commonly used methods. MD5 is widely used and is supported by many programming APIs currently in use.

Secure Hash Algorithm (SHA-1): SHA is a cryptographic message digest algorithm similar to the MD4 family of hash functions developed by Rivest. It differs in that it adds an additional expansion operation and an extra round, and in that the whole transformation was designed to accommodate the DSS block size for efficiency. The Secure Hash Algorithm takes a message of less than 2^64 bits in length and produces a 160-bit message digest, which is designed so that it should be computationally expensive to find a text which matches a given hash.
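The digest lengths given above can be checked with the Python standard library. This is a small sketch; the function name and the sample input are chosen only for illustration.

```python
import hashlib
from typing import Dict, Tuple


def digest_report(data: bytes) -> Dict[str, Tuple[int, str]]:
    """Return (digest length in bits, hex digest) for MD5 and SHA-1."""
    report = {}
    for name in ("md5", "sha1"):
        h = hashlib.new(name, data)
        report[name] = (h.digest_size * 8, h.hexdigest())
    return report


short_input = digest_report(b"a")
long_input = digest_report(b"a" * 100_000)

for name in ("md5", "sha1"):
    bits, hex_digest = short_input[name]
    print(name, bits, "bits,", len(hex_digest), "hex characters")
```

The digest length is the same whether the input is one byte or one hundred thousand, which is what makes the value practical to store alongside each file.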

PREMIS distinguishes fixity information from digital signatures, which are used to guarantee the authenticity of the object and are created by the document producer, submitter, or even the archive itself to connect the agent with the object. Digital signatures are unique to the signature producer, but they also relate to the content of the document: the process of creating and verifying digital signatures relies on the generation and checking of fixity values generated using a Secure Hash Algorithm. Both the creator of the signature and the fixity of the document from the point that the signature was created are needed to confirm a document's authenticity.

Digital signatures combine a hash message digest with encryption. A digital signature starts with the creation of a message digest from the digital object. The message digest is then encrypted using asymmetric cryptography. Asymmetric cryptography uses a pair of keys: a private key to encrypt and a public key to decrypt. The private key must be held secretly and securely by the signer. The signature can be verified by decrypting the signature with the signer’s public key and comparing the now-decrypted digest with a new digest produced by the same algorithm from the same content. A reliable digital signature requires that:

- The process of producing a signature is considered to be unique to the producer;

- The signature is related to the content of the document that was signed;

- The signature can be recognized by others to be the signature of the person or entity that produced it.
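The digest-then-encrypt process described above can be illustrated with a toy example. Everything here is an assumption made for teaching purposes: the key pair is built from two small, publicly known primes, the digest is reduced modulo the key to fit, and no padding is applied. It is textbook RSA and is not secure; a real repository would rely on a vetted cryptographic library.

```python
import hashlib

# Toy key pair from two known Mersenne primes (far too small for real use).
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                           # public modulus
E = 65537                           # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (requires Python 3.8+)


def digest_as_int(content: bytes) -> int:
    """Message digest of the content, reduced to fit the toy modulus."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N


def sign(content: bytes) -> int:
    """Signer: transform the digest with the private key."""
    return pow(digest_as_int(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Verifier: recover the digest with the public key and compare it
    with a fresh digest of the content."""
    return pow(signature, E, N) == digest_as_int(content)


document = b"Minutes of the founding congress"
signature = sign(document)

print(verify(document, signature))                        # genuine document and signature
print(verify(b"Minutes of the founding", signature))      # content was altered
print(verify(document, (signature + 1) % N))              # signature was altered
```

Only the holder of `D` can produce a value that verifies, while anyone holding `N` and `E` can check it, which corresponds to the three requirements listed above.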

As the PREMIS report outlines, digital signatures are used in preservation repositories in three ways. First, for submission to the repository an agent (author or submitter) might sign an object to assert that it truly is the author or submitter. Second, for dissemination from the repository the repository may sign an object to assert that it truly is the source of the dissemination. And finally, for archival storage a repository may sign an object so that it will be possible to confirm the origin and integrity of the data. In this case, the signature itself and the information needed to validate the signature must be preserved.

Fixity Workflows

General workflows for generating, storing, and checking fixity information include:

- At least one but ideally more fixity values should be generated at the point of digitization or ingest. The algorithm used should always be preserved along with the fixity value.

- Each fixity check should be treated as a digital life-cycle event. Fixity checks should be tracked, for instance in an audit trail or log file; information recorded should include a date/time stamp, the staff member performing the check, and the result of the fixity check.

- The generation of new or additional fixity values should be regarded as a digital life cycle event. Fixity value generation should be tracked, for instance as part of an audit trail or log file; information recorded should include the date/time stamp and staff member generating the value.

- Best practices within the digital preservation community regarding fixity checks should be continually reviewed. Particular attention should be paid to the use of digital signatures.
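The workflow points above could be sketched as follows: more than one fixity value is generated and stored with its algorithm name, and both generation and checking are recorded as events. The event field names, the agent identifier, and the in-memory list used as an audit trail are hypothetical; a repository would persist these records in its own system.

```python
import hashlib
from datetime import datetime, timezone
from typing import Dict, List

audit_log: List[dict] = []  # stand-in for an audit trail or log file


def log_event(event_type: str, agent: str, outcome: str) -> None:
    """Record a life-cycle event with a date/time stamp and responsible agent."""
    audit_log.append({
        "eventType": event_type,
        "eventDateTime": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "outcome": outcome,
    })


def generate_fixity(data: bytes, agent: str, algorithms=("md5", "sha1")) -> Dict[str, str]:
    """Generate one value per algorithm; the algorithm name is kept as the key."""
    values = {alg: hashlib.new(alg, data).hexdigest() for alg in algorithms}
    log_event("fixity value generation", agent, "success")
    return values


def check_fixity(data: bytes, stored: Dict[str, str], agent: str) -> bool:
    """Recompute every stored value and log the result of the check."""
    ok = all(hashlib.new(alg, data).hexdigest() == value for alg, value in stored.items())
    log_event("fixity check", agent, "pass" if ok else "fail")
    return ok


master = b"master file content"
stored_values = generate_fixity(master, agent="staff-01")
first_check = check_fixity(master, stored_values, agent="staff-01")
second_check = check_fixity(master + b"!", stored_values, agent="staff-01")

for event in audit_log:
    print(event["eventDateTime"], event["eventType"], event["outcome"])
```

Keeping the algorithm name next to each value means a check can still be run after the repository changes its default algorithm.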

HOPE recommends that repositories support more than one common fixity algorithm/method and generate at least one fixity value for each master file; each value should be stored along with the algorithm used. Repositories should support the export of fixity values to other systems. Fixity values should be updated during life-cycle events, such as migration, transfer, or export of master files. Event information should be stored related to the generation of fixity values, fixity checks, fixity value exports, and fixity value updates. Over the long term, fixity check algorithms and methods should be monitored and updated in keeping with changes in practice.

Related Resources

Caplan, Priscilla. ''Understanding PREMIS''. Washington D.C.: Library of Congress, 2009. (https://www.loc.gov/standards/premis/understanding-premis.pdf)

Consultative Committee for Space Data Systems. ''Reference Model for an Open Archival Information System''. CCSDS 650.0-M-2 Magenta Book. Washington D.C.: NASA, 2012. (https://public.ccsds.org/pubs/650x0m2.pdf)

Gartner, Richard. "Metadata for Digital Libraries: State of the Art and Future Directions." ''JISC Technology and Standards Watch''. Bristol, U.K.: JISC, 2008. (http://www.jisc.ac.uk/media/documents/techwatch/tsw_0801pdf.pdf)

Lavoie, Brian, and Richard Gartner. ''Technology Watch Report: Preservation Metadata''. Oxford: Oxford University Library Services, 2005. (https://www.dpconline.org/docs/technology-watch-reports/88-preservation…)

Library of Congress. ''AudioMD Data Dictionary''. (http://www.loc.gov/rr/mopic/avprot/DD_AMD.html)

Library of Congress. ''VideoMD Data Dictionary''. (http://www.loc.gov/rr/mopic/avprot/DD_VMD.html)

''<METS> Metadata Encoding and Transmission Standard: Primer and Reference Manual, v1.6''. Washington D.C.: Library of Congress, 2007. (http://www.loc.gov/standards/mets/METS Documentation final 070930 msw.pdf)

MINERVA. ''Technical Guidelines for Digital Cultural Content Creation Programmes, v1.2''. Bath, U.K.: UKOLN, 2008. (http://www.minervaeurope.org/publications/MINERVA-Technical-Guidelines-…)

National Institute of Standards and Technology (NIST). ''Federal Information Processing Standards Publication: Secure Hash Standard (SHS)''. Gaithersburg, MD: NIST, 2012. (https://ws680.nist.gov/publication/get_pdf.cfm?pub_id=910977)

National Library of New Zealand. ''Metadata Standards Framework-Preservation Metadata (Revised)''. Wellington, N.Z.: NLNZ, 2003. (http://digitalpreservation.natlib.govt.nz/assets/Uploads/nlnz-data-mode…)

Network Development and MARC Standards Office, Library of Congress. ''NISO Metadata for Images in XML Schema''. (http://www.loc.gov/standards/mix)

Network Working Group. ''RFC 1321: The MD5 Message-Digest Algorithm''. 1992. (http://www.ietf.org/rfc/rfc1321.txt)

NISO. ''NISO Standard Z39.87, Technical Metadata for Digital Still Images''. (https://groups.niso.org/apps/group_public/download.php/17936/z39-87-200…)

NISO. ''Understanding Metadata''. Bethesda, MD: NISO Press, 2004. (https://www.niso.org/publications/understanding-metadata)

Novak, Audrey (ILTS). ''Fixity Checks: Checksums, Message Digests and Digital Signature''. 2006. (http://docplayer.net/9057558-Fixity-checks-checksums-message-digests-an…)

OCLC/RLG Working Group on Preservation Metadata. ''Preservation Metadata and the OAIS Information Model: A Metadata Framework to Support the Preservation of Digital Objects''. Dublin, OH: OCLC, 2002. (http://www.oclc.org/research/activities/past/orprojects/pmwg/pm_framewo…)

PREMIS. ''PREMIS Data Dictionary for Preservation Metadata, v. 2.1''. Washington D.C.: Library of Congress, 2011. (http://www.loc.gov/standards/premis)

U.S. National Archives and Records Administration (NARA). ''Technical Guidelines for Digitizing Archival Materials for Electronic Access''. College Park, MD: NARA, 2004. (https://www.archives.gov/preservation/technical/guidelines.html)